Gen AI Cost Structures & Pricing Strategies Part 2: Edge Computing

One of the most exciting developments in Generative AI is that model capabilities have improved predictably with scale and are “...nowhere near the point of diminishing.” However, while this notion fuels enthusiasm for new, cutting-edge capabilities, there is an arguably equally impactful implication that receives less mainstream hype: as we improve our compression of AI models, previously cutting-edge capabilities can be stored on local hardware, returning these products to the “Zero Marginal Costs” model that has defined Silicon Valley.

In 2024, especially with the mainstream release of Apple Intelligence, Gen AI Edge Computing has entered the spotlight. Along with its unique benefits of enhanced privacy/security, reduced latency, and offline accessibility (which is critical in various B2B contexts), Edge-Hosted AI models incur greatly reduced marginal costs, analogous to that of traditional technology software.

As a result, in the continuously evolving landscape of Gen AI B2B products, the emergence of Edge Computing will return pricing for many edge-powered Generative AI products and features back to traditional industry standards.

Additionally, for the many Gen AI products that will leverage both Cloud-Hosted and Edge-Hosted AI Models in a hybrid fashion, companies will create product categories comparable to existing ones with distinct cost structures and pricing strategies. Such strategies include cloud-powered Gen AI products that offload data processing and query subsets onto the edge, free edge-powered Gen AI offerings with paywalled Cloud-Hosted models, and products that start out unprofitable until Edge-Hosted AI models advance.

This article is Part 2 of Carya’s series on how the rise of both Agentic Gen AI and Gen AI Edge Computing will impact cost structures and pricing strategies. For more about how Agentic Gen AI will accelerate an already occurring trend towards pricing based on measurable business outcomes, reference Part 1.

Silicon Valley’s Zero Marginal Costs Model – Origin & Pricing Implications

Zero Marginal Costs Origins

Silicon Valley rose to economic prominence due in part to the “Zero Marginal Costs” model that many of the area’s products have followed since its inception. Silicon chips, which serve as the brains behind most modern electronic devices, are a prime example of this.

To develop silicon chips, companies had to invest in significant upfront costs that went towards fabrication facilities, specialized manufacturing equipment, and R&D. However, once these fixed costs were incurred, the variable costs that went into the chips’ materials themselves were practically negligible, since the primary raw material for chips is silicon, which is abundant and relatively inexpensive.

This is in stark contrast to traditional physical product manufacturing, in which more expensive raw materials and production labor mean that variable costs consume a substantial share of the revenue each unit earns. Because silicon chips carried much lower variable costs, they were unusually profitable, helping this sector’s economy flourish.

This paradigm became substantially more exaggerated with the proliferation of software that sat on top of products’ hardware layers. While silicon chips were cheaper to manufacture per-unit than traditional physical products, software didn’t involve any per-unit physical materials, at all (excluding cloud hosting costs when applicable). In fact, unlike even silicon chips, software did not require physical distribution, allowing it to be replicated and “shipped” to users without the supply chain considerations that are significant to other goods.

Of course, the utility of chips and software cannot be overstated. However, these offerings’ virtually negligible marginal costs are a large reason why they are among the most profitable segments of the world’s economy.

Evolution of Pricing Strategies

Originally, the software industry adopted the same one-time fixed pricing strategy of traditional physical products, since pricing initially tends to mimic what buyers are familiar with (especially within B2B). This initial mimicry allowed new product categories to establish a foothold in the market before transitioning to more innovative pricing strategies that, while capturing the product’s true value, would have been viewed skeptically by buyers before the product’s perceived value had been broadly established.

However, in time, pricing shifted to reflect software’s zero marginal cost reality. Namely, a resultant development of software’s free distribution is the ability for companies to seamlessly update their products without shipping users a new physical version (or packaging) of said product. In turn, this ushered in a subscription pricing model that has since become the industry standard. Traditional software’s shift from fixed pricing to Subscription-Based pricing is emblematic of the need for companies to justify the business value they produce. For more on this, reference Part 1.

Consider Adobe Photoshop, for instance. Originally, Adobe released a new version of its photo editing software every two years on average. Although each version was a significant improvement on the previous one, the software was not hosted on the cloud, but was instead distributed on a static storage device. Thus, although Adobe could improve the product and fix bugs between these lengthy release cycles, those improvements could not be shipped to customers until the next version’s release.

In contrast, once Adobe started hosting Photoshop on the cloud, when an improvement was made to the product, that fix or new feature could instantly be delivered to the user without them paying for a net new product to receive these benefits.

However, with this new ability, Adobe was now investing in continuous work without any additional revenue gained. In order for these perpetual development costs to be economically justifiable, Adobe had to shift to a new, Subscription-Based pricing strategy. By charging a monthly per-user fee for access to Photoshop, users now got the latest improvements to the product as soon as Adobe could develop them.

Additionally, this pricing strategy proved immensely profitable, as companies now received continuous recurring revenue streams from their users without waterfall marketing and release moments. This is known as the Software-as-a-Service, or SaaS, pricing model, which is now prevalent in B2B Software.

As time went on, further evolutions to B2B software pricing occurred. Namely, in order to attract new customers, many pieces of software applied a “freemium” pricing model, which adds a basic, free tier for their products which can be upgraded to a Subscription-Based premium model (and in some cases, various tiers of premium pricing models that progressively add more features).

Recently, in an effort to assure B2B decision-makers that products provide enough measurable value to justify purchasing them, companies have shifted once again, this time to a “consumption-based” ([Usage/Outcome]-based) pricing model, which ties what customers pay to the usage or value they receive. For more on this development, reference Part 1.

Cloud-Hosted Generative AI’s Re-Introduction of Marginal Costs

Due to the compute that Cloud-Hosted Generative AI products require on a variable basis, when the AI models that power these products are hosted on the cloud, they incur significant marginal costs. Thus, the rise of Generative AI has effectively done away with Silicon Valley’s “Zero Marginal Costs” model and the relative ease-of-profitability that comes with it.
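To make this contrast concrete, the following is a minimal sketch of per-user monthly gross margin with and without a per-query inference cost. Every number in it (the $20 price, 600 queries per month, and $0.02 per query) is a hypothetical assumption chosen for illustration, not data from any vendor.

```python
def monthly_gross_margin(price, queries_per_month, cost_per_query):
    """Per-user gross margin for one month, as a fraction of revenue."""
    variable_cost = queries_per_month * cost_per_query
    return (price - variable_cost) / price

# Hypothetical inputs: a $20/user/month product used for 600 queries a month.
traditional = monthly_gross_margin(20.0, 600, 0.0)    # no per-query cost
cloud_gen_ai = monthly_gross_margin(20.0, 600, 0.02)  # assumed $0.02 per query

print(f"traditional software: {traditional:.0%}")
print(f"cloud-hosted Gen AI:  {cloud_gen_ai:.0%}")
```

Under these assumptions, the same price that is almost pure margin for traditional software leaves well under half of revenue as margin once each query carries a cost, and the gap widens as usage grows.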

The following sections of this article argue that, due to Gen AI products powered by Edge Computing returning to Silicon Valley’s “Zero Marginal Costs” model, pricing strategies for Edge-Hosted Gen AI products will normalize back to the pre-Gen-AI era, as well as result in various creative pricing strategies for products powered by both cloud and edge hosted models.

Gen AI Edge Computing – Returning Pricing to before Generative AI

Generative AI - Edge Computing Overview

Generative AI Edge Computing has two variants: “Locally-Hosted” and “At the Edge of the Network”. This article will primarily involve the former, defined by AI models being stored directly within the hardware that sources its Inference’s input data.

While Generative AI models themselves generally go through Training (the computationally expensive process of ingesting data into, and subsequently creating/fine-tuning an AI model) on cloud servers, Inference (which involves the actual execution of user inputs by said AI model) can take place on a user’s local device. These local-device models are known to be Edge-Hosted.

Since the AI model needs to both fit and run on a local device (which often has significantly less storage and compute than cloud servers), Edge-Hosted AI models are considerably less capable than cutting-edge AI models hosted on the cloud.

Still, Edge-Hosted AI models present numerous benefits for the user, including greatly reduced latency (since the data’s required travel time is negligible), offline capabilities, and privacy/security. For more on this, reference this article.

Edge-Hosted Generative AI’s Return to Zero Marginal Costs

However, arguably the most impactful benefit of Gen AI Edge Computing is not for the user, but for the companies implementing Generative AI into their products: Since Gen AI’s expensive compute operations are executed using the electricity/power of a local device, Inference costs are all but removed. As a result, the significant marginal costs of Gen AI are mitigated, returning these products to Silicon Valley’s “Zero Marginal Costs” model.

The below diagram illustrates Gen AI Edge Computing’s benefits in the context of Apple Intelligence, which dynamically routes user prompts to either Edge-Hosted on-device AI Models, Apple’s own Server Models, or 3rd Party “World Models” such as OpenAI’s GPTs (for more information about Apple Intelligence’s application of Edge Computing, reference this article).

How companies apply Subscription-Based, Usage-Based, and Outcome-Based pricing will reflect edge-powered Gen AI products’ return to zero marginal costs. Additionally, many products that employ both Edge Computing and Cloud-Hosted models (as Apple Intelligence does) will adopt creative pricing models that combine elements of all three strategies.

Generative AI Hybrid Processing via Edge Computing

When this article mentions “hybrid processing” of both Cloud-Hosted and Edge-Hosted Gen AI, it’s referring to two distinct concepts. The first regards a single product leveraging both Edge-Hosted AI models (on a user’s local device) and Cloud-Hosted AI models depending on the specific use case. This concept will be outlined later under “Edge-Hosted & Cloud-Hosted Gen AI Hypotheses”.

However, there is also an alternative method of processing AI Inference with both Cloud-Hosted and Edge-Hosted Gen AI, in which any AI model (even the latest, cutting-edge models that cannot be fully processed locally) runs across both the cloud and local devices. This Edge Computing strategy involves a layer of infrastructure that splits a single AI model’s processing between idle local-device compute and cloud-server compute. Various startups offer such infrastructure and claim that it reduces Inference costs significantly.

If this infrastructure technology is truly effective on a large scale, AI model providers will likely provide it in-house (in addition to startups that sell this infrastructure) due to their incentives to reduce marginal costs. The cost structure implications of these innovations involve greatly reduced marginal costs of these “partially-Cloud-Hosted” AI models, potentially lessening the benefits of fully-Edge-Hosted models’ zero marginal costs.

As a result, in the following sections, it is safe to assume that the listed impacts of Gen AI Edge Computing also apply to partially-Cloud-Hosted AI models (which enable greatly reduced marginal costs, rather than Edge Computing’s zero marginal costs) to a lesser extent.

Subscription-Based Pricing

Impact of Cloud-Hosted Gen AI

Just as deterministic software’s initial pricing strategy reflected that of the pricing paradigms before it – appealing to buyers’ expectations despite not fitting its cost structure’s realities – so too did Generative AI products’ initial pricings mirror that of deterministic software (i.e. monthly per-user subscription models).

In fact, many prominent Generative AI products still use monthly Subscription-Based pricing for both simplicity and familiarity. Especially for consumers and smaller businesses, subscriptions are an easily understood pricing model, and thus more digestible.

However, since Generative AI products incur more marginal costs than deterministic software, these prices have, on average, been significantly higher than typical software SKUs. For example, take a typical software SKU, Microsoft 365 Business Standard, which is sold for $12.50/user/month.

This bundle includes 10 different pieces of software, including the core Microsoft Office products (Word, Excel, PowerPoint), a full communication platform (Teams), a full email platform (Outlook), a file storage platform (OneDrive), a video editing platform (Clipchamp), and a Notion-like collaboration platform (Microsoft Loop), among other products. Altogether, this bundle gives most businesses practically every piece of software they need to function.

Still, the Microsoft 365 Copilot add-on, which integrates Generative AI into the aforementioned products (though it is not essential to a business’s functions), is priced at 2.4 times that bundle, at $30/user/month. While businesses are willing to pay for Microsoft 365 Copilot, this pricing is informed in part by the marginal costs required to run it. Especially for startups that do not have diversified revenue streams, a ubiquitous brand presence, and large cash reserves, this type of pricing will be prohibitive for many of their prospective customers.

Impact of Gen AI Edge Computing

Since Edge Computing will decrease Gen AI products’ marginal costs towards a level similar to non-Gen AI software, subscription costs for products powered by Edge-Hosted AI models can go down considerably while still being economically feasible for startups and big companies, alike. Thus, especially for Gen AI product pricing models targeted at consumers and smaller businesses, subscription models will likely remain prominent.

Usage-Based Pricing

Impact of Cloud-Hosted Gen AI

Usage-Based pricing will, for the purposes of this article, refer to the subset of consumption-based pricing models which charge customers by the variable amount of input usage they perform, independent of any successful business outcomes in which this usage results. For example, if a Gen AI product charges users based on how many queries they input (irrespective of whether the output response is satisfactory), this is Usage-Based Pricing.
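The distinction between the two consumption-based models can be sketched as two invoice calculations over the same month of activity. The `Query` record, the per-query and per-outcome prices, and the 60% success rate are all hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Query:
    resolved: bool  # True if the output met the customer's success criterion

def usage_based_invoice(queries, price_per_query):
    """Charge for every query submitted, regardless of the result."""
    return len(queries) * price_per_query

def outcome_based_invoice(queries, price_per_outcome):
    """Charge only for queries that produced a successful outcome."""
    return sum(1 for q in queries if q.resolved) * price_per_outcome

# Hypothetical month: 100 queries, 60 of which produced a successful outcome.
month = [Query(resolved=(i < 60)) for i in range(100)]
usage_total = usage_based_invoice(month, price_per_query=0.10)
outcome_total = outcome_based_invoice(month, price_per_outcome=0.25)
print(usage_total, outcome_total)
```

The usage-based total depends only on how many queries were sent, while the outcome-based total moves with the number of successful results, which is the property the later sections rely on.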

Similar to how cloud-hosting providers like AWS and Azure employ Usage-Based pricing, the B2B APIs of Gen AI model providers (like OpenAI and Anthropic) also charge based on consumption. This pricing model has often been passed down to customers of applied Gen AI products, though it has been shifting towards Outcome-Based pricing because that model’s incentives align with customer value. For more on this, reference Part 1.

Impact of Gen AI Edge Computing

Regarding Gen AI Edge Computing, since these products do not incur significant marginal costs, the previous patterns and justifications for Usage-Based pricing (that marginal costs were being passed onto the user) no longer apply.

As Edge-Hosted AI models powering B2B Gen AI products become more popular (the infrastructure for which is already being put in place, as indicated by recent advancements in local LLM technology that can run even on CPUs), customers will view the costs of these offerings much as they view those of traditional deterministic software. Usage-Based pricing for traditional software, such as Microsoft Word, would be viewed extremely skeptically by customers, who would not only see this strategy as greedy, but might also be disincentivized from using the product. In the near future, a similar attitude towards edge-powered Gen AI products will likely arise.

Outcome-Based Pricing

Impact of Cloud-Hosted Gen AI

Outcome-Based pricing, which charges users based on the successful, measurable outputs that products produce, was already gaining prominence before the advent of Gen AI, and has only become more widespread among Generative AI products. This is largely due to the need for products to demonstrate business value to their customers.

Especially due to AI Agents, which complete tasks with clearly and easily measurable outcomes, Outcome-Based pricing is expected to rise in popularity as Gen AI matures. For more about Gen AI’s influence on Outcome-Based pricing, reference Part 1.

Impact of Gen AI Edge Computing

Gen AI Edge Computing will further accelerate this shift towards Outcome-Based pricing. As stated above, Usage-Based pricing will decline as Edge-Hosted Gen AI products become more prominent, since there are fewer marginal costs to pass on, and Outcome-Based pricing will likely fill much of the resulting gap. Note that in some instances, Usage-Based pricing will instead be replaced by Subscription-Based pricing. However, the general shift of non-Gen AI products towards Outcome-Based pricing suggests that Outcome-Based pricing will also grow as a result of Edge Computing.

As this article will expand upon in the “Edge Offloaded, Cloud-Hosted” section, in order to ensure that a Gen AI product can produce a successful outcome while still minimizing costs, many products will likely follow a similar structure to Apple Intelligence, handling a Gen AI operation with a local device model when possible, but routing to Cloud-Hosted models (due to their often enhanced capabilities) when necessary.

If products that do this employ either Subscription-Based or Usage-Based pricing, they’d be incentivized to route queries to locally-hosted AI models as much as possible, even if the outcomes produced are less acceptable (since this would minimize costs while revenue remains unchanged). However, under Outcome-Based pricing, the product earns revenue only through successful outcomes, so pricing and product quality would be far more closely aligned, giving the vendor a direct reason to deliver the best experience possible.
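The incentive argument above can be sketched as the expected change in vendor profit from sending a single query to the cloud model instead of the edge model. The success probabilities, the cloud cost, and the outcome fee are hypothetical assumptions, and edge inference is treated as free to the vendor.

```python
def cloud_routing_gain(pricing, p_edge, p_cloud, cloud_cost, outcome_fee):
    """Expected change in vendor profit from sending ONE query to the cloud
    model instead of the edge model (edge inference is assumed to be free)."""
    if pricing in ("subscription", "usage"):
        # Revenue is the same whichever model answers, so the cloud only adds cost.
        return -cloud_cost
    if pricing == "outcome":
        # Revenue rises with the extra chance of a successful outcome.
        return (p_cloud - p_edge) * outcome_fee - cloud_cost
    raise ValueError(f"unknown pricing model: {pricing}")

# Hypothetical hard query: the edge model succeeds 30% of the time, the cloud 90%.
hard = dict(p_edge=0.30, p_cloud=0.90, cloud_cost=0.02, outcome_fee=0.10)
for pricing in ("subscription", "usage", "outcome"):
    print(pricing, round(cloud_routing_gain(pricing, **hard), 3))
```

With these assumed numbers, only the outcome case returns a positive value, so only Outcome-Based pricing rewards the vendor for escalating a hard query. The alignment is conditional, though: it holds when the outcome fee multiplied by the quality improvement exceeds the cloud cost, which is why an easy query that the edge model already handles well would still stay on the device.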

Regarding products that are entirely powered by Edge-Hosted AI models, since these pieces of software have similar cost structures to traditional deterministic technology, they will likely continue along non-Gen-AI’s macroeconomically-influenced trend towards Outcome-Based pricing (which is referenced in more detail in Part 1).

Edge-Hosted & Cloud-Hosted Gen AI Hypotheses

As stated earlier, many Gen AI products that leverage Edge-Hosted AI models will do so in a hybrid fashion, also employing Cloud-Hosted cutting-edge AI models when appropriate. Therefore, different parts and/or variations of the same products may incur significant marginal costs, while others do not.

This cost structure is new for software products, and it will likely produce pricing strategies that combine elements of the three aforementioned pricing categories (Subscription-Based, Usage-Based, and Outcome-Based).

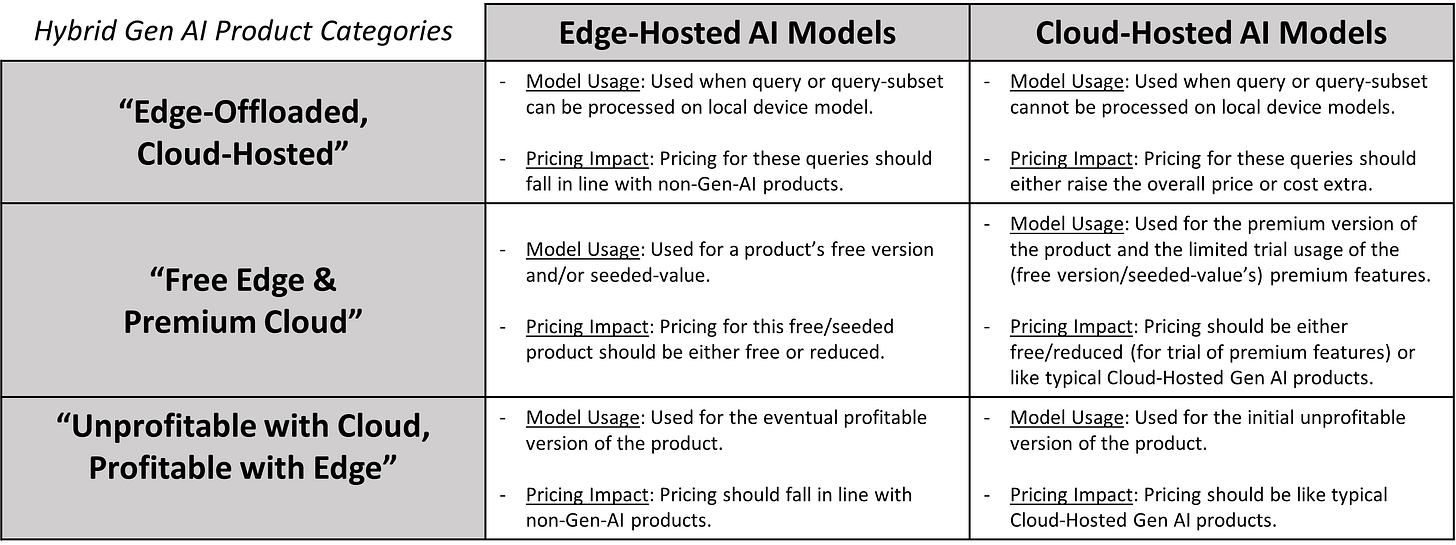

The remainder of this article dives into three hypotheses for how companies will incorporate hybrid approaches to Edge-Hosted and Cloud-Hosted Gen AI products, as well as the resultant pricing strategies that stem from these implementations.

These strategies include Gen AI products that route queries and tasks to Edge-Hosted AI models when possible (using Cloud-Hosted AI models as a costly, yet more capable fallback when not), free edge-powered Gen AI offerings with paywalled cloud-powered capabilities, and products that may be initially unprofitable until Edge-Hosted AI models advance to match current Cloud-Hosted models’ abilities.

Below is a table of the aforementioned hypothesized hybrid Gen AI product categories which details how these products will both leverage and be impacted by Edge-Hosted and Cloud-Hosted AI models:

“Edge Offloaded, Cloud-Hosted” Products

Gen AI products that fall under this category are nothing new. Even among different Cloud-Hosted AI Models, generally, more capable models cost more per-query. As a result, many existing Gen AI products employ Model Routing to optimize their costs, having an LLM prompt be processed by the least computationally expensive AI models required to successfully perform a Gen AI use case.

For example, in Microsoft 365 Copilot products, we know that simple LLM-generated summarization use cases can be handled successfully by GPT-3.5, which is more cost-effective than GPT-4. Thus, to optimize costs, if an LLM prompt is identified as a summarization use case, Model Routing will ensure that GPT-3.5 handles the query, since routing this prompt to GPT-4 would incur unnecessary costs.

Note that Model Routing can also be employed to optimize for output, leveraging models that maximize the response quality depending on what prompt is inputted, independent of costs. For more information on Model Routing in the context of Cost Optimization, reference this article (and to learn more about advanced Model Routing to maximize performance, see this section).
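Cost-optimizing Model Routing, as described above, can be sketched as picking the cheapest model that supports a given task. This is a minimal illustration rather than any vendor’s implementation: the model names, relative costs, and task labels are hypothetical, and the step that classifies a prompt into a task is assumed to happen upstream.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Model:
    name: str
    cost_per_query: float       # hypothetical relative cost
    supported_tasks: frozenset  # tasks this model handles acceptably

def route(task, models):
    """Return the cheapest model that supports the task."""
    capable = [m for m in models if task in m.supported_tasks]
    if not capable:
        raise ValueError(f"no model supports task: {task}")
    return min(capable, key=lambda m: m.cost_per_query)

# Hypothetical catalog: a cheap model for simple tasks and a costlier, broader one.
MODELS = [
    Model("small-model", 0.001, frozenset({"summarization"})),
    Model("large-model", 0.030,
          frozenset({"summarization", "multi_step_reasoning"})),
]

print(route("summarization", MODELS).name)
print(route("multi_step_reasoning", MODELS).name)
```

The same selection rule extends to edge offloading if an on-device model is added to the catalog with a cost of zero, since it would then win every task it supports.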

Regarding Gen AI Edge Computing, these “Edge Offloaded, Cloud-Hosted” products follow the same pattern: When a Gen AI use case (or a subset of that use case) can be successfully performed by an Edge-Hosted model, the product will offload this task to Edge Computing. However, for more complex scenarios, a use case (or a subset of this use case) may require the cutting-edge capabilities of Cloud-Hosted AI models. Only in these cases will the Gen AI product route (via an LLM-powered Orchestration layer) to marginal-cost-inducing Cloud-Hosted AI models.

This structure is found in Apple Intelligence, which leverages a mechanism called LoRA (Low-Rank Adaptation) to optimize a single Edge-Hosted LLM for different types of tasks without storing multiple versions of this AI model (for more about LoRA and AI Model Compression, refer to this section of our Edge Computing article). Thus, Apple Intelligence can handle many types of user queries without routing them to the cloud, despite the model’s relatively small size.
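The storage benefit of this approach can be sketched with simple parameter counts. In LoRA, each adapted weight matrix of shape d_out x d_in gets two small low-rank factors of rank r, which adds r * (d_in + d_out) parameters per matrix instead of a full copy. The dimensions, rank, matrix count, and task count below are hypothetical and are not Apple’s actual configuration.

```python
def full_copy_params(d_in, d_out, num_matrices):
    """Parameters in the base model's weight matrices."""
    return d_in * d_out * num_matrices

def lora_adapter_params(d_in, d_out, rank, num_matrices):
    """Parameters one task adapter adds: two low-rank factors per matrix,
    shaped (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out) * num_matrices

# Hypothetical model: 100 square matrices of width 2048, rank-16 adapters, 8 tasks.
d, r, n, tasks = 2048, 16, 100, 8
base = full_copy_params(d, d, n)
separate_models = base * tasks
shared_base_with_adapters = base + lora_adapter_params(d, d, r, n) * tasks

print(f"one fine-tuned model per task: {separate_models:,} parameters")
print(f"one base model plus adapters:  {shared_base_with_adapters:,} parameters")
```

Under these assumptions, each adapter is a small fraction of the base model’s size, which is what makes it plausible to specialize one on-device model for many tasks within a phone’s storage budget.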

Pricing Implications

Since this category of Gen AI products routes to marginal-cost-inducing AI models via an Orchestration layer that identifies prompts’ intents, these products know whether a request will incur cloud costs before processing it. As a result, it is likely that many “Edge Offloaded, Cloud-Hosted” products will be priced in line with non-Gen-AI products for base (i.e. edge-powered) capabilities, and charge additional fees for premium (i.e. cloud-powered) capabilities.

For smaller business or consumer products, like Apple Intelligence, this may come in the form of a subscription add-on fee. Analysts previously predicted that Apple Intelligence could charge between $10 and $20 per month for “World Model” routing, which leverages 3rd party models like OpenAI’s GPT, while still offering any Gen AI functionality on its own models for free.

For Enterprises, since they often value flexibility and purchase-justification over simplicity, either Subscription-Based or Outcome-Based pricing will likely be employed. Tasks offloaded to the edge would be priced in line with fully edge-powered AI, while tasks routed to the cloud would be charged per “premium outcome”.
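One way such an Enterprise invoice could be computed is sketched below: a flat per-seat fee covering edge-powered capabilities, plus a charge for each successful cloud-routed outcome. The function name, the fee levels, and the usage figures are hypothetical.

```python
def hybrid_invoice(seats, base_fee_per_seat, premium_outcomes,
                   fee_per_premium_outcome):
    """Flat per-seat fee for edge-powered features plus a charge per
    successful cloud-routed ("premium") outcome."""
    base = seats * base_fee_per_seat
    premium = premium_outcomes * fee_per_premium_outcome
    return {"base": base, "premium": premium, "total": base + premium}

# Hypothetical month: 50 seats at $12 each and 300 premium outcomes at $0.50 each.
invoice = hybrid_invoice(seats=50, base_fee_per_seat=12.0,
                         premium_outcomes=300, fee_per_premium_outcome=0.5)
print(invoice)
```

Keeping the two components separate on the invoice mirrors the cost structure: the base fee maps to the near-zero-marginal-cost edge features, and the premium line maps to the cloud usage that actually incurs cost.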

“Free Edge” & “Premium Cloud” Products

This second category of Gen AI products that selectively leverage Edge Computing will do so in a “freemium” fashion, pay-walling the use of more costly, cutting-edge Cloud-Hosted AI models. This pricing strategy already exists in current cloud-powered Gen AI products, like Perplexity.AI. Perplexity includes a free version of its product that leverages its own proprietary LLM, while “Pro” and “Enterprise” tier users’ queries are powered by more capable (and more costly) 3rd party AI models (such as the GPT and Claude series).

Note that while Perplexity’s standard, free model is Cloud-Hosted, its marginal costs are lower since the model is proprietary. As more capable models living within local devices become more normalized, a product’s free tier that incurs negligible marginal costs but does a relatively satisfactory job (as compared to cutting-edge cloud-powered Gen AI) can be effective as seeded value. Once this product’s utility within a B2B workflow is proven, in many cases up-charging to superior capabilities will be an easy decision, especially if the premium version is previewed to users (such as within Perplexity where users are given 5 free “premium” searches per day).
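The preview mechanic described above can be sketched as a small gate that decides which tier answers each query. The class and method names are hypothetical; the default of five daily previews follows the Perplexity example in the text, and the in-memory counter stands in for whatever persistent store a real product would use.

```python
from collections import defaultdict
from datetime import date

class PremiumPreviewGate:
    """Decides whether a query is answered by the premium or the free tier."""

    def __init__(self, free_premium_per_day=5):
        self.free_premium_per_day = free_premium_per_day
        self._used = defaultdict(int)  # (user_id, day) -> previews used

    def choose_tier(self, user_id, is_subscriber, today=None):
        """Return "premium" or "free" and record any preview that is consumed."""
        if is_subscriber:
            return "premium"
        key = (user_id, today or date.today())
        if self._used[key] < self.free_premium_per_day:
            self._used[key] += 1
            return "premium"
        return "free"

gate = PremiumPreviewGate()
day = date(2024, 1, 1)
tiers = [gate.choose_tier("user-1", is_subscriber=False, today=day)
         for _ in range(6)]
print(tiers)
```

In an edge-powered version of this pattern, the "free" branch would be served by the on-device model, so users who exhaust their previews cost the vendor almost nothing.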

Additionally, this Gen AI product category does not always just plug in more advanced AI models into the exact same UX, but sometimes makes UX-enhancements that are only possible with the greater capabilities of cutting-edge AI models. Again, Perplexity is a great example of this. Take, for instance, a side-by-side of the free and premium versions of Perplexity’s answer to the same query: “I want to type up a report to my professor about the differences between Carbon Offsets and Carbon Allowances, but do so with a controversial stance that will get their attention, but not come off as if I’m a climate skeptic.”

[Screenshots: Perplexity’s Free Version and Pro Version responses to the query above]

Unlike in the free version, Perplexity’s “Pro Search” displays real-time multi-step reasoning to tackle complex problems, visibly breaking queries down into smaller goals and delivering thorough answers efficiently. Additionally, a recently-released Perplexity Pro feature integrates OpenAI’s o1-preview reasoning model into searches when appropriate, despite incurring significantly higher marginal costs per query as a result.

Pricing Implications

The pricing implications of this category of Gen AI products are fairly straightforward: In order to provide seeded value for both consumers and organizations’ employees, there will be a free, edge-powered version of these products. The free versions should be useful and, importantly, hint at the increased value of a more advanced version. In some cases, these less-capable versions may be appropriate for non-power-users and find durable product market fit. Although not ideal, if these cases do arise, a lower price may be applied to the base versions.

Depending on the Gen AI products, their premium versions’ pricing strategies may be Subscription-Based, Usage-Based, or Outcome-Based, likely trending towards Outcome-Based in line with the industry and proliferation of more Agentic Gen AI use cases.

“Unprofitable with Cloud, Profitable with Edge” Products

Finally, this third category of Gen AI products occurs when, during a product’s initial phase, acceptable customer outcomes can only be attained with Cloud-Hosted AI models. In these cases, companies may not be able to make a profit while leveraging marginal-cost-inducing Cloud-Hosted AI models, but will do so to gain early-market traction. To reach profitability, these companies will rely on today’s Cloud-Hosted capabilities eventually becoming storable on local devices via Edge Computing, which would give them a profitable cost structure.
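The path to profitability for this category can be sketched as a break-even calculation: what share of queries must move to the edge before per-user margin stops being negative? Modeling the transition as a gradually rising edge share is an assumption made here for illustration, as are the price, query volume, and cloud cost.

```python
def monthly_margin(price, queries, cloud_cost_per_query, edge_share):
    """Per-user monthly margin when a fraction of queries runs on the edge
    (edge inference is assumed to cost the vendor nothing)."""
    cloud_queries = queries * (1.0 - edge_share)
    return price - cloud_queries * cloud_cost_per_query

def break_even_edge_share(price, queries, cloud_cost_per_query):
    """Smallest edge share at which the margin is no longer negative."""
    full_cloud_cost = queries * cloud_cost_per_query
    if full_cloud_cost <= price:
        return 0.0  # already profitable without any edge offload
    return 1.0 - price / full_cloud_cost

# Hypothetical product: $15/user/month, 1,500 queries, $0.02 per cloud query.
share = break_even_edge_share(15.0, 1500, 0.02)
print(f"break-even edge share: {share:.0%}")
print(f"margin when fully cloud-hosted: {monthly_margin(15.0, 1500, 0.02, 0.0):.2f}")
print(f"margin when fully edge-hosted:  {monthly_margin(15.0, 1500, 0.02, 1.0):.2f}")
```

A product in this category starts on the negative side of that threshold and depends on model compression moving enough of its workload onto devices to cross it.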

Like the other Gen AI product categories described above, this phenomenon is not new, but an existing strategy adapted for a new hybrid Gen AI context. Silicon Valley products have historically often adopted a “grow-at-all-costs, become profitable later” model in order to gain initial traction. The companies that do this rely on funding, often by VCs, to support themselves (rather than being “default alive”, which means that a business can sustain itself without relying on external funding), despite burning more money than they make.

A prominent example of this is Uber. Uber was founded in 2009, reached over 122 million users in 2022 (earning almost $32 billion in revenue that year), and has long been a household name. However, despite evidently being a huge success, Uber first turned a profit as a public company in 2023 (which, besides a slight profit in 2018 that a $1 billion funding round helped support, was the first time Uber had turned a profit at all).

Still, while there are many Silicon Valley parallels to this Gen AI product category, this hypothesis has a couple of caveats. Once cutting-edge AI capabilities can be brought to locally-hosted models, it’s very likely that they will no longer be cutting-edge. More advanced Gen AI capabilities will exist, but once again may only be accessible on Cloud-Hosted AI models. If a Gen AI product is only viable if its capabilities are on the cutting-edge, then it should not position itself in this category.

However, this is not how real enterprise value works. Just as customer value is found not in usage itself but in measurable business outcomes, so too is it tied not to the AI that powers a product but to the business outcomes that the AI enables. Thus, even if an edge-powered Gen AI product’s capabilities aren’t the most advanced available, that shouldn’t diminish a good product’s customer value.

Additionally, although the “grow-at-all-costs” Silicon Valley model has been widespread and successful, it has declined as of late, due in part to increases in American and European central bank interest rates. However, the “grow-at-all-costs” startups of the past primarily sacrificed profitability for customer acquisition spend (CAC) and increased hiring.

While these levers do often increase growth, they can often mask the flaws of products that cannot stand on their own without being artificially inflated. If a product cannot achieve growth organically (instead requiring significant, unsustainable marketing spend), this is likely a flawed product that should improve rather than be amplified.

“Unprofitable with Cloud, Profitable with Edge” products, conversely, incur their marginal costs from the product’s technology itself. Unless these products elect to delay development (and feedback, iteration, etc.), and thus their early market traction, there’s no alternative to initial unprofitability. Thus, if products can achieve organic growth and become “default alive” once technology advances, there’s a considerable chance that being initially unprofitable in the name of early traction is worth the costs and dependency on external funding.

Pricing Implications

Pricing for “grow-at-all-costs”-esque products will likely remain relatively unchanged. Especially within the consumer realm, startups will sometimes undercut the incumbent businesses they’re attempting to disrupt in order to gain market share (despite this being unprofitable), before eventually raising prices once users are dependent on their services. Again, Uber is a prime example of this tactic: it became significantly more expensive once VCs no longer subsidized its rides, and it no longer undercuts the taxi business that it disrupted largely through that initial undercutting.

However, since this Gen AI product category is unprofitable due to initially-necessary marginal costs (rather than in the name of marketing spend or unsustainable pricing), its pricing will likely not be impacted significantly. If these products increased their pricing to obtain profitability during their initial Cloud-Hosted stage, they would no longer fit into this product category, instead merely optimizing their cost structure as Gen AI gets cheaper.

An area where these products’ cost structure may be impacted is, ironically, that they will often be unable to leverage the traditional “grow-at-all-costs” model. Since “Unprofitable with Cloud, Profitable with Edge” products are already not default alive (initially), incurring additional costs on top of their marginal costs is even less practical than before. Thus, this will necessitate increased discipline in the products themselves, since leaning on VC-backed artificial growth isn’t a feasible route.

Overall, Edge Computing effectively bifurcates Gen AI’s costs into two distinct pricing models: one leveraging cutting-edge AI that necessitates significant marginal costs, and the other – enabled by Edge Computing – mirroring the traditional tech “Zero Marginal Costs” structure that’s helped define the industry.

Not only will Gen AI Edge Computing’s advent lead to markedly different cost structures and pricing strategies, but also various creative product categories that leverage both Cloud-Hosted and Edge-Hosted AI models, each with resultant pricing that mirrors those of today’s products. As LLMs become continuously optimized and compressed to fit on local devices, new pricing realities will be unlocked, making Gen AI’s powerful capabilities accessible to even more consumers and enterprises.